Pawel Pabich's blog

Random thoughts on computer things I'm passionate about.

Thursday, 7 November 2024

Octopus Cloud architecture

Thursday, 28 January 2016

Time in distributed systems

Time in a program that runs on a single machine and uses a local physical clock is already a tricky concept. Just think about time zones, daylight saving and the fact that they can change at any time because they are governed by politicians. Distributed programs add one more dimension to this problem space because there isn’t a single clock that decides what time it is.

The goal of this post is to create a short summary of some of the academic papers written on this topic and in the process to better understand the challenges faced by the builders of distributed systems. I hope I won’t be the only person that will benefit from it :).

As end users we take the notion of time for granted and rely on our physical clocks to determine whether event A happened before event B. This works great most of the time because in our day-to-day lives we don’t really need high precision time readings. The fact that my watch is running a minute or two late compared to some other watch doesn’t really matter all that much. Computer systems are different because they operate at much higher speeds and even small variations can affect the before-after relationship. The clocks that are used in computers nowadays tend to drift enough that distributed systems can’t really rely on them. At least not as the only source of truth when it comes to time.

To solve this problem Leslie Lamport introduced the idea of virtual time that can be implemented using logical clocks (Time, Clocks, and the Ordering of Events in a Distributed System). His algorithm implements an invariant called the Clock Condition: if event A happened before event B then the value of the logical clock when A happened is lower than the value of the logical clock when B happened, in short: if A –> B then C(A) < C(B). This invariant provides one of many possible, consistent partial orderings of events in a distributed system. The drawback of the ordering established based on the Clock Condition is that C(A) < C(B) doesn’t mean A happened before B. Moreover, as far as the end user is concerned B might even have happened before A or might have happened concurrently with A.
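A minimal sketch of a logical clock may help here. The class and method names are my own, not something taken from the paper, and message passing is reduced to handing a timestamp from one object to another.

```python
class LamportClock:
    """Logical clock owned by a single process (illustrative names)."""

    def __init__(self):
        self.time = 0

    def tick(self):
        # A local event or a message send advances the counter.
        self.time += 1
        return self.time

    def receive(self, sender_time):
        # On receive, move past both our own time and the sender's timestamp.
        self.time = max(self.time, sender_time) + 1
        return self.time


p, q, r = LamportClock(), LamportClock(), LamportClock()
a = p.tick()      # event A: process P sends a message
b = q.receive(a)  # event B: process Q receives it, so A -> B
d = r.tick()      # event D: unrelated local event on process R

print(a < b)  # the Clock Condition holds for the causally related pair
print(d < b)  # the clock values are ordered although D and B are unrelated
```

The last line is the drawback described above: comparing two clock values on their own does not tell us whether the events are causally related.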

Vector Clocks, introduced by Colin J. Fidge (Timestamps in Message-Passing Systems That Preserve the Partial Ordering), address the shortcoming mentioned above by providing an invariant that is the converse of the Clock Condition: if C(A) < C(B) then A –> B. A Vector Clock is built on top of the logical clock designed by Leslie Lamport. The main difference is that instead of using just one logical clock for the whole system it uses one logical clock per process in the system. In this way it tries to capture the distributed state of time in the system. A Vector Clock stores a list of values so the original invariant should be rewritten as: if VC(A) < VC(B) then A –> B, where VC(A) < VC(B) means that VC(A)[i] <= VC(B)[i] for every i and there exists a j such that VC(A)[j] < VC(B)[j]. E.g. [1,2,3] < [1,2,4]. If neither A –> B nor B –> A (e.g. VC(A) = [1,2,1] and VC(B) = [2,1,2]) then A and B are considered to be concurrent events.
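The comparison rule can be sketched in a few lines. The function names are mine, and the sketch assumes both vectors have one slot per process in the same order.

```python
def happened_before(vc_a, vc_b):
    """True when vc_a < vc_b: no component is greater and at least one is smaller."""
    pairs = list(zip(vc_a, vc_b))
    return all(x <= y for x, y in pairs) and any(x < y for x, y in pairs)


def concurrent(vc_a, vc_b):
    """True when the two (different) clocks cannot be ordered either way."""
    return (
        vc_a != vc_b
        and not happened_before(vc_a, vc_b)
        and not happened_before(vc_b, vc_a)
    )


def merge_on_receive(local, received, own_index):
    """Take the component-wise maximum, then advance our own slot."""
    merged = [max(x, y) for x, y in zip(local, received)]
    merged[own_index] += 1
    return merged


print(happened_before([1, 2, 3], [1, 2, 4]))
print(concurrent([1, 2, 1], [2, 1, 2]))
print(merge_on_receive([1, 2, 1], [2, 1, 2], own_index=0))
```

The first two calls use the examples from the paragraph above; the third shows how a process could combine its own clock with the one attached to an incoming message.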

Even with Vector Clocks we can end up with concurrent events that from the end user perspective are not concurrent. To mitigate this problem we can make a full circle and employ help from physical clocks or we can simply let the user order the events for us. This is how Riak deals with write conflicts. It accepts concurrent writes to the same key but then the conflict needs to be resolved at the read time.

The first step towards fixing a problem is to realize that there is a problem and this is what Vector Clocks provide because they can detect conflicts. If you don’t think this is a big deal then follow Kyle Kingsbury’s Jepsen series on distributed systems correctness to see how many mainstream (database) systems can lose data and not know about it.

Wednesday, 9 September 2015

Random lessons learnt from building large scale distributed systems, some of them on Azure.

This post is based on more than one project I’ve been involved in but it was triggered by a recent project where I helped build an IoT system that is capable of processing and storing tens of thousands of messages a second. The system was built on top of Azure Event Hubs, Azure Web Jobs, Azure Web Sites, HDInsight implementation of HBase, Blob Storage and Azure Queues.

As the title suggests it is a rather random set of observations but hopefully they all together form some kind of whole :).

Keep development environment isolated, even in the Cloud

A fully isolated development environment is a must have. Without it developers step on each other’s toes which leads to unnecessary interruptions and we all know that nothing kills productivity faster than context switching. When you build an application that uses SQL Server as a backend you would not think about creating one database that is shared by all developers because it’s relatively simple to have an instance of SQL Server running locally. When it comes to services that live only in the Cloud this is not that simple and often the first obvious solution seems to be to share them within the team. This is the preferred option especially in places that are not big on automation.

Another solution to this problem is to mock Cloud services. I strongly recommend against it as it will hide a lot of very important details and debugging them in CI (or UAT or Production) is not an efficient way of working.

If sharing is bad then each developer could get a separate instance of each Cloud service. This is great in theory but it can get expensive very quickly. E.g. the smallest HBase cluster on Azure consists of 6 VMs.

The sweet spot seems to be a single instance of a given Cloud service with isolation applied at the next logical level.

- HBase – table(s) per developer

- Service Bus - event hub per developer

- Azure Search – index(es) per developer

- Blob Storage – container per developer

- Azure Queues – queue(s) per developer

Each configuration setting that is developer specific can be encapsulated in a class so the right value is supplied to the application automatically based on some characteristic of the environment in which the application is running, e.g. machine name.
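As a rough illustration, such a class could look like the sketch below. It is written in Python for brevity; the resource names and the rule that turns a machine name into a suffix are made up for this example.

```python
import socket


class DeveloperSettings:
    """Derives per-developer resource names from the machine name.

    All resource names below are hypothetical examples.
    """

    def __init__(self, machine_name=None):
        name = machine_name or socket.gethostname()
        # Keep only lower-cased alphanumeric characters so the suffix is
        # safe to embed in table, container and queue names.
        self.suffix = "".join(ch for ch in name.lower() if ch.isalnum())

    @property
    def hbase_table(self):
        return f"messages_{self.suffix}"

    @property
    def blob_container(self):
        return f"messages-{self.suffix}"

    @property
    def queue_name(self):
        return f"commands-{self.suffix}"


settings = DeveloperSettings("DEV-PC01")
print(settings.hbase_table, settings.blob_container, settings.queue_name)
```

The rest of the application asks the settings object for a name and never needs to know on whose machine it is running.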

Everything can fail

The number of moving parts increases with scale and so do the chances of one or more of them failing. This means that every logical component of the system needs to be able to recover automatically from an unexpected crash of one or more of its physical components. Most PaaS offerings come with this feature out of the box. In our case the only part we had to take care of was the processing component. This was relatively easy as Azure lets you continuously run N instances of a given console app (Web Job) and restarts them when they crash or redeploys them when the underlying hardware is not healthy.

When the system recovers from a failure it should start where it stopped which means that it possibly needs to retry the last operation. In a distributed system transactions are not available. Because of that, if an operation modifies N resources then all of the modifications should be idempotent so retries don’t corrupt the state of the system. If there is a resource that doesn’t provide such a guarantee then it needs to be modified last. This is not a bulletproof solution but it works most of the time.

- HBase – writes are done as upserts

- Event Hub - checkpointing is idempotent

- Blob Storage – writes can be done as upserts

- Azure Queues – sending messages is NOT idempotent so the de-duplication needs to be done on the client side
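Client-side de-duplication can be sketched as follows. This is an in-memory illustration only: it assumes the sender attaches a unique id to every message, and a real system would have to keep the set of seen ids somewhere durable and bounded.

```python
class DeduplicatingReceiver:
    """Invokes the handler at most once per message id (illustrative names)."""

    def __init__(self, handler):
        self._handler = handler
        self._seen = set()  # assumption: fits in memory for this sketch

    def receive(self, message_id, body):
        if message_id in self._seen:
            return False  # duplicate caused by a retried send, ignore it
        self._handler(body)
        self._seen.add(message_id)
        return True


processed = []
receiver = DeduplicatingReceiver(processed.append)
receiver.receive("msg-1", "switch the light on")
receiver.receive("msg-1", "switch the light on")  # the retry is dropped
print(processed)
```

The id is recorded only after the handler has finished, so a crash in the middle leads to a retry rather than to a lost message. That in turn means the handler itself still has to tolerate being run twice.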

Sometimes failure is not immediate and manifests itself in the form of an operation running much longer than it should. This is why it is important to have relatively short timeouts for all IO related operations and have a strategy that deals with them when they occur. E.g. if latency is important then a valid strategy might be to drop/log the data currently being processed and continue. Another option is to retry the failed operation. We found that the default timeouts in the client libraries were longer than what we were comfortable with. E.g. the default timeout in the HBase client is 100 seconds.
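The "drop, log and continue" strategy could be sketched like this. The helper name is made up, and the sketch only stops waiting for the slow call: the worker thread keeps running in the background, so a real client library timeout is still the better tool when one is available.

```python
import concurrent.futures
import logging
import time


def call_with_timeout(executor, operation, timeout_seconds, *args):
    """Returns (True, result), or (False, None) when the call takes too long."""
    future = executor.submit(operation, *args)
    try:
        return True, future.result(timeout=timeout_seconds)
    except concurrent.futures.TimeoutError:
        # Strategy from the text: log what was dropped and carry on.
        logging.warning("operation timed out after %.2fs, dropping %r",
                        timeout_seconds, args)
        return False, None


with concurrent.futures.ThreadPoolExecutor(max_workers=2) as pool:
    fast = call_with_timeout(pool, pow, 1.0, 2, 10)
    slow = call_with_timeout(pool, time.sleep, 0.05, 0.3)

print(fast, slow)
```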

Don't lose sight of the big picture

It is crucial to have access to an aggregated view of logs from the whole system. I can’t imagine having tail running for multiple components and doing the merge in my head. Tools like Seq are worth every cent you pay for them. Seq is so easy to use that we used it also for local development. Once the logging is set up I recommend spending 15 minutes a day watching logs. A well-instrumented system tells a story and when the story does not make sense you know you have a problem. We have found several important but hard to track bugs just by watching logs.

And log errors, always log errors. A new component should not leave your dev machine unless its error logging is configured.

Ability to scale is a feature

Every application has performance requirements but they are rarely explicitly stated and then the performance problems are discovered and fixed when the application is already in Production. As bad as it sounds in most cases this is not a big deal and the application needs only a few tweaks or we can simply throw more hardware at it.

This is not the case when the application needs to deal with tens of thousands of messages a second. Here performance is a feature and needs to be constantly checked. Ideally a proper load test would be run after each commit, the same way we run CI builds, but from experience once a day is enough to quickly find regressions and limit the scope of changes that caused them.

Predictable load testing is hard

Most CI builds run a set of tests that check correctness of the system and it doesn’t matter whether the tests run for 0.5 or 5 or 50 seconds as long as all assertions pass. This means that the performance of the underlying hardware doesn’t affect the outcome of the build. This is not true when it comes to load testing where slower than usual hardware can lead to false negatives which translate to wasted time spent on investigating problems that don’t exist.

In an ideal world the test would run on isolated hardware but this is not really possible in the Cloud which is a shared environment. Azure is not an exception here. What we have noticed is that using high spec VMs and running the load test at the same time of day, in the morning, helped keep the performance of the environment consistent. This is a guess but it looks like the bigger the VM the higher the chance for that VM to be the only VM on its host. Even with all those tweaks in place test runs with no code changes would differ by around 10%. Having load testing set up from the beginning of the project helps spot outliers and reduce the amount of wasted time.

Generating enough correct load is hard

We started initially with Visual Studio Load Test but we didn’t find a way to fully control the data it uses to generate load. All we could do was to generate all WebRequests up front which is a bit of a problem at this scale. We couldn’t re-use requests as each of them had to contain different data.

JMeter doesn’t suffer from this problem and was able to generate enough load from just one large VM. The templating language is a bit unusual but it is pretty powerful and at the end of the day this is what matters the most.

JMeter listeners can significantly slow down the load generator. After some experimentation we settled on the Summary Report and Save Responses to a file (only failures) listeners. They gave us enough information and had very small impact on the overall performance of JMeter.

The default batch file limits the JMeter heap size to 512MB which is not a lot. We simply removed the limit and let the JVM pick one for us. We used a 64 bit JVM which was more than happy to consume 4GB of memory.

Don’t trust average values

A surprising number of tools built for load testing show the average as the main metric. Unfortunately the average hides outliers and it is a bit like looking at a 3D world through 2D glasses. Percentiles are a much better approach as they show the whole spectrum of results and help make an informed decision whether it’s worth investing more in performance optimization. This is important because performance work never finishes and it can consume an infinite amount of resources.
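A small made-up data set shows the difference. The latencies below are invented for illustration: 98 requests that take 10 ms and 2 that take a full second.

```python
import statistics


def summarize(latencies_ms):
    """Mean plus a few percentiles of a list of latencies."""
    # quantiles(n=100) returns the 99 cut points between the percentiles.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "mean": statistics.mean(latencies_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
    }


latencies = [10] * 98 + [1000] * 2  # invented sample data
summary = summarize(latencies)
print(summary)
```

The mean lands somewhere around 30 ms, a value that no request actually experienced, while the median and the 99th percentile together make it clear that most requests are fast and a few are very slow.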

Feedback cycle on performance work is looooooooooooong

It is good to start load testing early as in most cases it is impossible to make a change and re-run the whole load test locally. This means each change will take quite a bit of time to be verified (in our case it was 45 minutes). In such a case it is tempting to test multiple changes at once but it is a very slippery slope. In a complex system it is hard to predict the impact a given change will have on each component so making assumptions is very dangerous. As an example, I sped up a component responsible for processing data which in turn put more load on the component responsible for storing data which in turn made the whole system slower. And by change I don’t always mean code change, in our case quite a few of them were changes to the configuration of Event Hub and HBase.

Testing performance is not cheap

Load testing might require a lot of resources for a relatively short period of time so generally it is a good idea to automatically create them before the test run and then automatically dispose of them once the test run is finished. In our case we needed the resources for around 2h a day. This can be done with Azure though having to use three different APIs to create all required resources was not fun. I hope more and more services will be exposed via the Resource Manager API.

On top of that it takes a lot of time to set everything up in a fully automated fashion but if the system needs to handle significant load to be considered successful then performance related work needs to be part of the regular day-to-day development cycle.

Automate ALL THE THINGS

I’m adding this paragraph for completeness but I hope that by now it is obvious that automation is a must when building a complex system. We have used Team City, Octopus Deploy and a thousand or so lines of custom PowerShell code. This setup worked great.

Event Hubs can scale

Event Hubs are partitioned and the throughput of each partition is limited to 1k messages a second. This means that an Event Hub shouldn’t be perceived as a single fat pipe that can accept any load that gets thrown at it. For example, an Event Hub that can in theory handle 50k messages a second might start throwing exceptions when the load reaches 1k messages a second because all messages happen to be sent to the same partition. That’s why it’s very important that the data used to compute PartitionKey is distributed as evenly as possible. To achieve that try a few hashing algorithms. We used SHA1 and it worked great.
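One way to check how evenly a hashing scheme spreads keys is to simulate it. The sketch below is an illustration only: the device ids are invented and the modulo step is my stand-in for bucketing, it is not how Event Hubs itself maps a PartitionKey to a partition.

```python
import hashlib
from collections import Counter


def bucket_for(device_id, bucket_count):
    """Maps an id to a bucket using the first 8 bytes of its SHA1 digest."""
    digest = hashlib.sha1(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % bucket_count


BUCKETS = 50
device_ids = [f"device-{n}" for n in range(50_000)]  # invented ids
counts = Counter(bucket_for(device_id, BUCKETS) for device_id in device_ids)

print(len(counts), min(counts.values()), max(counts.values()))
```

If the smallest and the largest bucket are close to the expected 1,000 ids each, the scheme is unlikely to overload a single partition. Running the same check with real ids from the system is more telling than running it with synthetic ones.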

Each Event Hub can have up to 20 independent consumer groups. Each consumer group represents a set of pointers to the message stream in the Event Hub. There is one pointer per partition. This means that there can be only one Event Hub reader per partition per consumer group.

Let’s consider an Event Hub with 50 partitions. If the readers need to do some data processing then a single VM might not be enough to handle the load and the processing needs to be distributed. When this happens the cluster of VMs needs to figure out which VM will run readers for which partitions and what happens when one of the readers and/or VMs disappears. Reaching consensus in a distributed system is a hard problem to solve. Fortunately, Microsoft provided us with the Microsoft.Azure.ServiceBus.EventProcessorHost package which takes care of this problem. It can take a couple of minutes for the system to reach consensus but other than that it just works.

HBase can scale

HBase is a distributed database where data is stored in lexicographical order. Each node in the cluster stores an ordered subset of the overall data. This means that data is partitioned and HBase can suffer from the same problem as the one described earlier in the context of Event Hubs. HBase partitions data using a row key that is supplied by the application. As long as row keys stored at more or less the same time don’t share the same prefix then the data should not be sent to the same node and there should be no hot spots in the system.

To achieve that the prefix can be based on a hash of the whole key or part of it. A successful strategy needs to take into account the way the data will be read from the cluster. For example, if the key has the form PREFIX_TIMESTAMP_SOMEOTHERDATA and in most cases the data needs to be read based on a range of dates then the prefix values need to belong to a predictable and ideally small set of values (e.g. 00 to 15). The query API takes start and end key as input so to read all values between DATE1 and DATE2 we need to send a number of queries to HBase which equals the number of values the prefix can have. In the sample above that would be 16 queries.
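The key scheme can be sketched without touching HBase at all. In the sketch below the choice of SHA1 for the prefix, the timestamp format and the function names are my assumptions. The timestamps have to sort lexicographically and the end key is treated as exclusive.

```python
import hashlib

PREFIX_COUNT = 16  # prefixes 00 to 15, as in the example above


def row_key(timestamp, other_data):
    """Builds PREFIX_TIMESTAMP_SOMEOTHERDATA with a hash-based prefix."""
    digest = hashlib.sha1(other_data.encode("utf-8")).digest()
    prefix = digest[0] % PREFIX_COUNT
    return f"{prefix:02d}_{timestamp}_{other_data}"


def scan_ranges(start_timestamp, end_timestamp):
    """One (start key, end key) pair per prefix value."""
    return [
        (f"{prefix:02d}_{start_timestamp}", f"{prefix:02d}_{end_timestamp}")
        for prefix in range(PREFIX_COUNT)
    ]


key = row_key("20150909T120000", "device-42")
ranges = scan_ranges("20150901", "20150930")
matching = [(start, end) for start, end in ranges if start <= key < end]

print(key)
print(len(ranges), len(matching))
```

Every row written in the date range falls into exactly one of the 16 key ranges, so the reader issues 16 range queries and merges the results.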

HBase exposes multiple APIs. The one that is the easiest to use is REST API called Stargate. This API works well but its implementation on Azure is far from being perfect. Descriptions of most of the problems can be found on the GitHub page of the SDK project. Fortunately, Java API works very well and it is actually the API that is recommended by the documentation when the highest possible level of performance is required. From the tests we have done Java API is fast, reliable and offers predictable latency. The only drawback is that it is not exposed externally so HBase and its clients need to be part of the same VNet.

BTW Java 8 with lambdas and streams is not that bad :) even though the lack of a var keyword and the presence of checked exceptions are still painful.

Batch size matters a lot

Sending, reading and processing data in batches can significantly improve overall throughput of the system. The important thing is to find the right size of the batch. If the size is too small then the gains are not big and if the size is too big then a change like that can actually slow down the whole system. It’s taken us some time to find the sweet spot in our case.

- A single Event Hub message has a size limit of 256 KB which might be enough to squeeze multiple application messages in it. On top of that Event Hub accepts multiple messages in a single call so another level of batching is possible. Batching can also be specified on the read side of things using EventProcessorHost configuration.

- HBase accepts multiple rows at a time and its read API can return multiple rows at a time. Be careful if you use its REST API for reading as it will return all available data at once unless the batch parameter is specified. Another behaviour that took us by surprise is that batch represents the number of cells (not rows) to return. This means a single batch can contain incomplete data. More details about the problem and possible solution can be found here.
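Packing several application messages under a size limit can be sketched as below. The function is illustrative: it counts payload bytes only and ignores any envelope or header overhead that a real transport adds, so a real limit would need some headroom.

```python
def batch_by_size(messages, max_bytes):
    """Groups byte strings into batches whose total size stays within max_bytes."""
    batches, current, current_size = [], [], 0
    for message in messages:
        size = len(message)
        if size > max_bytes:
            raise ValueError("a single message exceeds the batch size limit")
        if current and current_size + size > max_bytes:
            # The next message does not fit, so close the current batch.
            batches.append(current)
            current, current_size = [], 0
        current.append(message)
        current_size += size
    if current:
        batches.append(current)
    return batches


payloads = [b"x" * 100 for _ in range(5)]
batches = batch_by_size(payloads, max_bytes=256)
print([len(batch) for batch in batches])
```

With a helper like this the batch size becomes a single number that can be changed between load test runs while looking for the sweet spot.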

Web Jobs can scale

Azure makes it very easy to scale up or down the number of running instances. The application code doesn’t have to be aware of this process but it can be if that is beneficial. Azure notifies jobs before it shuts them down by placing a file in their working folder. Azure Web Jobs SDK comes with useful code that does the heavy lifting and all our custom code needs to do is respond to a notification. We found this very useful because Event Hub readers lease partitions for a specific amount of time so if they are not shut down gracefully the process of reaching distributed consensus takes significantly more time.
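The file-based notification boils down to a polling loop. The sketch below is a generic illustration and not the Web Jobs SDK: the function name is made up and the path of the shutdown file is simply passed in as a parameter.

```python
import os
import time


def run_until_shutdown(shutdown_file, do_work, poll_seconds=1.0):
    """Calls do_work repeatedly until the shutdown file appears.

    Returns the number of completed iterations.
    """
    iterations = 0
    while not os.path.exists(shutdown_file):
        do_work()
        iterations += 1
        time.sleep(poll_seconds)
    # This is the place to release leases and flush buffers so that other
    # instances can take over quickly.
    return iterations
```

A worker would call this with a function that processes one batch of messages, and do its clean-up once the loop returns.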

Blob Storage can scale

We used Blob Storage as a very simple document database. It was fast, reliable and easy to use.

Tuesday, 12 May 2015

Trying to solve a real world problem with Azure Machine Learning

I’ve spent the last couple of days playing with Azure Machine Learning (AML) to find out what I can do with it. To do so I came up with an idea that might or might not be useful for our recruitment team. I wanted to predict whether a given candidate would be hired by us or not. I pulled historical data and applied a few statistical models to it. The end result was not as good as I expected it to be. I guess this was caused by the fact that my initial dataset was too small.

How to start

I’ve done some ML and AI courses as part of my university degree but that was ages ago :) and I needed to refresh my knowledge. Initially I wanted to learn about algorithms so I started reading Doing Data Science but it was a bit too dry and I needed something that would be focused more on doing than theory. Recently released Microsoft Azure Essentials: Azure Machine Learning was exactly what I was looking for. The book is easy to read and explains step by step how to use AML.

Gathering initial data is hard

For some reason I thought that once I had data extracted from the source system it should be easy to use it. Unfortunately this was not true as the data was inconsistent and incomplete. I spent a significant amount of time filling the gaps and sometimes even removing data when it did not make sense in the context of the problem I was trying to solve. At the end of the whole process it turned out that I had removed 85% of my original dataset. This made me question whether I should be even using it. But this was a learning exercise and nobody was going to make any decisions based on it so I didn’t give up.

Data cleansing was a mundane task but at the end of it I had a much better understanding of the data itself and relationship between its attributes. It’s a nice and I would say important side effect of the whole process. Looking at data in its tabular form doesn’t tell the full story so as someone mentioned on the Internet it is important to visualize it.

AML Studio solves this problem very well. Every dataset can be quickly visualized and its basic statistical characteristics (histogram, mean, median, min, max, standard deviations) are displayed next to the charts. I found this feature very useful. It helped me spot a few mistakes in my data cleansing code.

Trying to predict the future

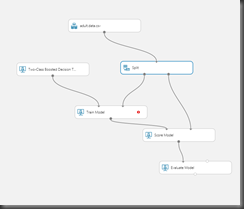

Once the data has been cleaned I opened AML Studio which is an online tool that lets you design ML experiments. It took me only a couple of minutes to train a Two-Class Boosted Decision Tree model. To do it in AML Studio you need to take the following steps:

- Import data

- Split data into two sets: training set and test set

- Pick the model/algorithm

- Train the model using the training set

- Score the trained model using the test set

- Evaluate how well the model has been trained

Each of the steps is represented by a separate visual element on the design surface which makes it very easy to create and evaluate experiments.

I tried a few more algorithms but none of them gave me better results. This part was more time consuming than it should be. Unfortunately at the moment there is no API that would allow me to automate the process of creating and running experiments. Somebody already mentioned that as a possible improvement so there is a chance it will be added in the future: http://feedback.azure.com/forums/257792-machine-learning/suggestions/6179714-expose-api-for-model-building-publishing-automat. It would be great to automatically evaluate several models with different parameters and then pick the one that gives the best predictions.

But it is Drag & Drop based programming!

In most cases Drag & Drop based programming demos well, sells well but has serious limitations when it comes to its extensibility and maintainability. “Code” written in this way is hard or impossible to test and offers poor or no ability to reuse logic so “copy and paste” approach tends to be used liberally. At first glance AML seems to belong to this category but after taking a closer look we can see that there is light at the end of the tunnel.

Each AML experiment can be exposed as an independently scalable HTTP endpoint with very well defined input and output. This means that each of them can be easily tested in isolation. This solves the first problem. The second problem can be solved by keeping the orchestration logic outside of AML (e.g. C# service) and treating each AML endpoint as a pure function. In this way we let AML do what it is good at without introducing the unnecessary complexity of implementing “ifs” visually.

I’ve trained the perfect model, now what ?

As I mentioned in the previous paragraph, the trained model can be exposed as an HTTP endpoint. On top of that AML creates an Excel file that is a very simple client built on top of the HTTP endpoint. As you type parameters the section with predicted values gets refreshed. And if you want to share your awesome prediction engine with the rest of the world you can always upload it to AML Gallery. One of the available APIs there is a service that calculates a person’s age based on their photo.

Sunday, 1 June 2014

How to run Selenium tests in parallel with xUnit

I’m a big fan of automated testing, unfortunately end to end tests, like the ones that use Selenium, have always been a problem. They are slow and their total execution time very quickly goes beyond what most teams would accept as a reasonable feedback cycle. On my current project our Full CI build takes nearly 2 hours. Every project of a significant size I know of has this problem, some of them have 4h+ builds. Assuming you have only one test environment then you can test up to 4 changes a day. Not a lot when daily deployments to Production are the ultimate goal. There are many solutions to this problem but most of them are based on splitting the list of tests into smaller subsets and executing them on separate machines (e.g. Selenium grid). This works but from my experience has two major drawbacks:

- it is complex to set up and gather results

- it can’t be replicated on a single machine, which means

  - I can’t easily debug tests that fail only because they are run together with some other tests

  - I can’t quickly run all tests locally to make sure my changes do not break anything

Another added benefit of running tests in parallel is that it becomes a very basic load test. This isn’t going to be good enough for sites that need to handle thousands of concurrent users, but it might be just what you need when the number of users is in low hundreds.

In the past I used MbUnit with VS 2010 and it worked great. I was able to run 20 Firefox browsers on a single VM. Unfortunately the project seems to be abandoned and it doesn’t work with VS 2013. Until recently, none of the major testing frameworks supported running tests in parallel. As far as I remember even xUnit 2.0 initially wasn’t meant to support this feature but this has changed and now it does.

So where is the catch? After spending some time with xUnit code my impression is that the core of xUnit and newly added parallelism were built with unit testing in mind. There is nothing wrong with this approach and I fully understand why it was done this way but it makes running tests that rely on limited resources (e.g. browser windows) challenging.

The rest of this blog post focuses on how to overcome those challenges. A sample app that implements most of the described ideas is available on GitHub: https://github.com/pawelpabich/SeleniumWithXunit. The app is based on a custom fork of xUnit: https://github.com/pawelpabich/xunit.

How to run tests in parallel

To execute tests in the sample app in parallel in VS 2013 (tested with Update 2) you need to install xUnit runner for VS and Firefox. Once this is done, compile the app, open Test Explorer (TEST –> Windows), select all tests and run them. You should see two browsers open at more or less the same time.

WARNING: If you want to run Selenium tests on a machine which has text size set to any value that is not 100%, stick to Selenium 2.37.0 and Firefox 25 as this is the only combo that works well in such a setup. This problem should be solved in the next version of Selenium which is 2.42.0 https://code.google.com/p/selenium/issues/detail?id=6112

xUnit unit of work

xUnit executes test collections in parallel. A Test Collection is a group of tests that belong either to a single class or to a single assembly. Tests that belong to a single test collection are executed serially. Collection behaviour can be specified in VS settings or as a global setting in code via the CollectionBehavior attribute. On top of that we have the ability to control the level of concurrency by setting MaxParallelThreads. This threshold is very important as a single machine can handle only a limited number of browsers.

At the moment a single test can’t become a test collection which means that a class with a few long running tests can significantly extend the total execution time, especially if it happens to be run at the end of a test run. I added this feature to my fork of xUnit: https://github.com/pawelpabich/xunit/commit/7fa0f32a1f831f8aef55a8ff7ee6db0dfb88a8cd and the sample app uses it. Both tests belong to the same class yet they are executed in parallel.

Isolate tests, always

Tests should not share any state but tests that run in parallel simply must not do it. Otherwise it is like asking for trouble which will lead to long and frustrating debugging sessions. The way this can be achieved is to make sure that all state used by a test is created in the constructor of the test and it gets disposed once the test is finished. For this we can use Autofac and create a new LifetimeScope for each test which then gets disposed when the test object gets disposed. xUnit doesn’t support IoC so we need to inject all dependencies using property injection in the constructor which is not a big deal.

Shared resources, yes, they exist

Technically speaking each test could launch a new instance of Firefox but this takes quite a bit of time and can be easily optimized. What we need is a pool of browsers that tests can take browsers from and return them to once they are done with them. In most cases shared resources can be initialized once per test run. xUnit doesn’t have a concept of global initialization so that’s why the test run setup happens in the constructor of the base class that all other tests inherit from. This isn’t a great solution but it works. You might be able to move it to the static constructor as long as the setup code doesn’t use Threads and Tasks because they will cause deadlocks. Lack of global initialization means that there is no global clean-up either, but this can be worked around by subscribing to the AppDomain.DomainUnload event and performing clean-up there. From experience this works only most of the time so I would rather have a proper abstraction in the framework.
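The pool itself is a small piece of code. Below is a language-neutral sketch written in Python rather than C#. The class name is made up, and a plain object stands in for a browser so the example runs without Selenium.

```python
import queue
from contextlib import contextmanager


class ResourcePool:
    """Fixed-size pool of expensive resources, e.g. browser instances."""

    def __init__(self, factory, size):
        self._items = queue.Queue()
        for _ in range(size):
            self._items.put(factory())

    @contextmanager
    def lease(self, timeout=None):
        # Blocks until a resource is free; raises queue.Empty on timeout.
        item = self._items.get(timeout=timeout)
        try:
            yield item
        finally:
            # Always hand the resource back, even when the test fails.
            self._items.put(item)


pool = ResourcePool(factory=object, size=2)  # object() stands in for a browser
with pool.lease() as first, pool.lease() as second:
    print(first is not second)
```

Because a test blocks until a browser is free, the size of the pool also caps the number of browsers open at the same time.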

Don't block synchronously

When MaxParallelThreads is set to a non zero value then xUnit creates a dedicated Task Scheduler with limited number of threads. This works great as long as you use async and await. But if you need to block synchronously you might cause a deadlock as there might be no free threads to execute the continuation. In such a case the safest way is to execute the code on the Default .NET Task Scheduler.

I need to know when something goes wrong

If a test implements IDisposable then xUnit will call the Dispose method when the test is finished. Unfortunately this means the test doesn’t know whether it failed or succeeded which is a must have for browser based tests because we want to take a screenshot when something goes wrong. That’s why I added this feature to my fork of xUnit.

What about R#, I won’t code without it!

There is an alpha version of xUnit plugin for R#. It runs xUnit tests fine but it doesn’t run them in parallel at the moment. But this might change in the future so keep an eye on https://github.com/xunit/resharper-xunit/pull/1.

Async, async everywhere

Internally xUnit uses async and await extensively. Every piece of the execution pipeline (Create test object –> Execute Before Filter –> Execute test –> Execute After Filter –> Dispose test) can end up as a separate Task. This doesn’t work well with tests that rely on limited resources because new tests can be started before already finished tests are disposed and their resources returned to a shared pool. In such a case we have two options. Either the new tests are blocked or there will be more resources created which is not always a viable option. This is why in my fork of xUnit the whole execution pipeline is wrapped in just one Task.

What about Continuous Integration

There is a console runner that can output TeamCity service messages and this is what I use. It works great, and the only complaint I have is that the TeamCity web interface doesn’t cope well with messages coming concurrently from multiple different flows. This should be fixed in the future: http://youtrack.jetbrains.com/issue/TW-36214.

Can I tell you which tests to execute first?

In an ideal world we would be able to run failed tests first (e.g. we can discover them via an API call to the build server, thanks Jason Stangroome for the idea) and then the rest of the tests ordered by their execution time in descending order. xUnit lets us order tests within a single test collection but there is no abstraction to provide a custom sort order of test collections, which is what I added to my fork. The orderer is specified in the same way a test orderer would be.
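
The ordering rule itself is simple. The sketch below is in Python and is not the orderer from my fork; CollectionStats and the sample history are made up, and in practice the data would come from the build server.

```python
from dataclasses import dataclass


@dataclass
class CollectionStats:
    name: str
    failed_last_run: bool
    duration_seconds: float


def order_collections(stats):
    # Failed collections first (False sorts before True, hence the `not`),
    # then the slowest first so long-running tests start as early as possible.
    return sorted(stats, key=lambda s: (not s.failed_last_run, -s.duration_seconds))


history = [
    CollectionStats("Checkout", False, 120.0),
    CollectionStats("Login", True, 15.0),
    CollectionStats("Search", False, 300.0),
    CollectionStats("Profile", True, 45.0),
]
ordered = [s.name for s in order_collections(history)]
```

With this data the run starts with Profile and Login, which failed last time, followed by Search and Checkout from slowest to fastest.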

It works but it has some sharp edges

All in all I’m pretty happy with the solution and it has been working great for me over the last couple of weeks. The overall execution time of our CI build dropped from 1 h 41 min to 21 min, which makes it almost five times faster.

The work is not finished and I hope that together we can make it even easier to use and ideally make it part of the xUnit project. I started a discussion about this here (https://github.com/xunit/xunit/issues/107). Please join and share your thoughts. It’s time to put the problem of slow tests to bed so we can focus on more interesting challenges :).

Tuesday, 1 January 2013

How to run Hadoop on Windows

The easiest way to start is to download a preconfigured VMware image from Cloudera. This is what I did and it worked, but it did not work well. The highest resolution I could set was 1024x768. I installed the VMware client tools but they did not seem to work with the Linux distribution picked by Cloudera. I managed to figure out how to use vim to edit text files, but a tiny window with a flaky UI (you can see what is happening inside Hadoop using a web browser) was more than I could handle. Then I thought about getting it working on Mac OS X, which is a very close cousin of Linux. The installation process is simple but the configuration process is not.

So I googled a bit more and came across Microsoft HDInsight, which is Microsoft’s distribution of Hadoop that runs on Windows and Windows Azure. HDInsight worked great for me on Windows 8 and I was able to play with the three most often used query APIs: the native Hadoop Java-based map/reduce framework, Hive and Pig. I used word count as a problem to see what each of them is capable of. Below are links to sample implementations:

- Java map/reduce framework – run c:\hadoop\hadoop-1.1.0-SNAPSHOT\bin\hadoop.cmd to get into command line interface for Hadoop

- Pig – run C:\Hadoop\pig-0.9.3-SNAPSHOT\bin\pig.cmd to get into Grunt which lets you use Pig

- Hive – run C:\Hadoop\hive-0.9.0\bin\hive.cmd to get into Hive command line interface
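
For readers who haven’t seen the word count problem before, here is a tiny in-memory sketch of the map, shuffle and reduce steps in plain Python. It doesn’t use Hadoop at all; the function names and the sample lines are made up for the illustration.

```python
from collections import defaultdict


def map_phase(line):
    # Emit a (word, 1) pair for every word in the line.
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    # Group the emitted values by key, as the framework does between map and reduce.
    grouped = defaultdict(list)
    for word, count in pairs:
        grouped[word].append(count)
    return grouped


def reduce_phase(word, counts):
    # Collapse all values for one key into a single total.
    return word, sum(counts)


lines = ["the quick brown fox", "the lazy dog", "The fox"]
pairs = [pair for line in lines for pair in map_phase(line)]
word_counts = dict(reduce_phase(w, c) for w, c in shuffle(pairs).items())
```

Hadoop runs the same three steps, only spread across many machines and with the data read from and written to its file system.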

As far as I know Microsoft is going to contribute their changes back to the Hadoop project, so at some stage we might get Hadoop running natively on Windows in the same way Node.js is.

Thursday, 18 October 2012

Quick overview of TFS Preview

- It was very easy to configure automated deployments to Azure

- The performance of web portal ranged from acceptable to painfully slow.

- The UI is decent and it’s easy to execute all the basic tasks like adding a user story, adding a bug or looking up a build

- Burndown chart worked out of the box

- Scrum board is simple and does the job

- Builds would take up to a couple of minutes to start even if the build queue was empty

- Total build time was a bit unpredictable, ranged from 60s to 160s for the same code.

- Adding support for nUnit was more hassle than I anticipated

- Storyboarding in PowerPoint is not integrated with TFS so you can’t push files directly from PowerPoint to TFS

- There is no Wiki

- Build log is as “useful” as it was in TFS 2010